Since COVID-19, one thing separating businesses that are surviving from businesses that are failing is their ability to embrace changes in customer behavior and to use technology to turn on a dime. In the new normal, modern IT must be able to respond to changing business conditions by accelerating innovation through software.

If deployment, the last step of the software innovation pipeline, is stuck, is the whole pipeline stuck? How do you ‘fail fast’ and innovate faster if you cannot deploy and validate your software changes very quickly?

You can’t.

Allow me to tell you a story to set some context for why I am so excited about the possibilities Spinnaker offers for “Modernizing IT”.

The story is loosely based on real events at a modern cloud company. We were deploying and operationalizing machine learning models for production use. Early in the journey, we had two environments: DEV/STAGING and PRODUCTION. As a company investing in building out its own infrastructure as code, we could easily and quickly spin up complex environments, so we knew another environment was only days away. However, tight control over cloud costs kept us at two environments for the innovation phase.

Version 1 of the machine learning model was validated in staging with expert users and approved for production rollout. Great. Version 1 was running in production, used by many (non-expert) end users, and DEV/STAGING was free for future development.

Version 2 was ready in the DEV/STAGING environment within 4 weeks, as the team iterated fast. The product team had a long backlog of innovations and wanted to keep iterating on the model after releasing version 2. However, in a release meeting, the customer’s executive stakeholder said, ‘The customer is not ready to take the new version in production yet. They are blocked on another critical project.’

Well, that just blocked the whole train.

Unlike a traditional software development lifecycle (SDLC), machine learning models require iterative and frequent changes to data, pipelines, model fitting, validation, and model performance analysis. So when the last mile of deployment was blocked, the whole train of innovation was blocked. We decided to hold off on the release, but we lost time until we were able to spin up another environment, potentially absorbing its cost as a cost of doing business.

We had three main challenges:

- Per-customer configurations weren’t cleanly isolated (in particular, configurations such as the hyperparameter tuning that data scientists had to do weren’t part of the deployment configurations).

- Customer budget limitations meant that spinning up clusters and keeping them running wasn’t an option unless we absorbed those costs (somehow).

- While infrastructure was automated, training, validation, and performance analysis of the models were still manual steps outside the deployment flow, causing errors, misunderstandings, and frustration across the operations and data science teams.

The chasm between the data science and operations teams made it difficult to understand, share, and agree on solutions that were operationally ready (secure, compliant, scalable, supportable) without a lot of back and forth between the teams, process innovation, and leadership involvement.

That story highlights many real-world challenges that can slow innovation down or block it outright.

Last weekend, I discovered Spinnaker. As I learned more about it, I became excited about its capabilities and the role it could play in the last mile of application delivery to ensure innovation doesn’t slow down or stop.

Spinnaker for the Last Mile

Spinnaker is a continuous delivery platform that Netflix open-sourced several years ago, but it is a complex beast if you want to manage it yourself.

It is a vendor-agnostic, multi-cloud platform that automates continuous delivery of applications to the cloud of your choice (Azure, AWS, GCP, or others) with high velocity and confidence.

Spinnaker defines an application as a collection of clusters, which in turn are collections of server groups. Each server group identifies a deployable artifact (such as a Docker image, VM image, or source code location) along with its related configuration.
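To make that hierarchy concrete, here is a minimal Python sketch of the model as described above. The class and field names (`Application`, `Cluster`, `ServerGroup`, `artifact`, `config`) and the image references are hypothetical illustrations, not Spinnaker’s actual API or storage format.

```python
from dataclasses import dataclass, field


@dataclass
class ServerGroup:
    """A deployable artifact plus the configuration it runs with (hypothetical model)."""
    name: str
    artifact: str  # e.g. a Docker image reference or a VM image name
    config: dict = field(default_factory=dict)  # e.g. replica count, environment settings


@dataclass
class Cluster:
    """A named collection of server groups, e.g. successive versions of one service."""
    name: str
    server_groups: list[ServerGroup] = field(default_factory=list)


@dataclass
class Application:
    """Top-level unit: an application is a collection of clusters."""
    name: str
    clusters: list[Cluster] = field(default_factory=list)

    def artifacts(self) -> list[str]:
        """List every artifact deployed across all clusters of this application."""
        return [sg.artifact for c in self.clusters for sg in c.server_groups]


# Usage: one app with a staging and a production cluster, each running a different model version.
app = Application(
    name="model-api",
    clusters=[
        Cluster("model-api-staging", [
            ServerGroup("model-api-staging-v002", "registry.example.com/model-api:2.0", {"replicas": 1}),
        ]),
        Cluster("model-api-prod", [
            ServerGroup("model-api-prod-v001", "registry.example.com/model-api:1.0", {"replicas": 3}),
        ]),
    ],
)
print(app.artifacts())
```

In this sketch, keeping the configuration next to the artifact in each server group is what lets two versions of the same model run side by side in separate clusters, each with its own settings.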

Having never played with GCP (I am an Azure guy), I set about deploying a sample app with Spinnaker and learning GCP by doing.

The tutorial for getting a sample app running was well designed and straightforward. The one thing that made the experience painful for me was the multiple user IDs I had with Google. Each time I followed a link, GCP for some reason used a user context that was NOT the one I was working with, and thus always failed to find my resources. I had to switch to the correct user manually each time. Perhaps there is a better experience, but this was only mildly annoying given the amazing outcome: a complex deployment that went flawlessly in a short amount of time. In no time, I had the latest version of Spinnaker deployed from GitHub and proceeded to deploy the sample helloworld app.

If you are in enterprise IT, Spinnaker and the emerging technologies built on it are a must-consider to accelerate your IT modernization efforts and keep pace with rapid changes in business.

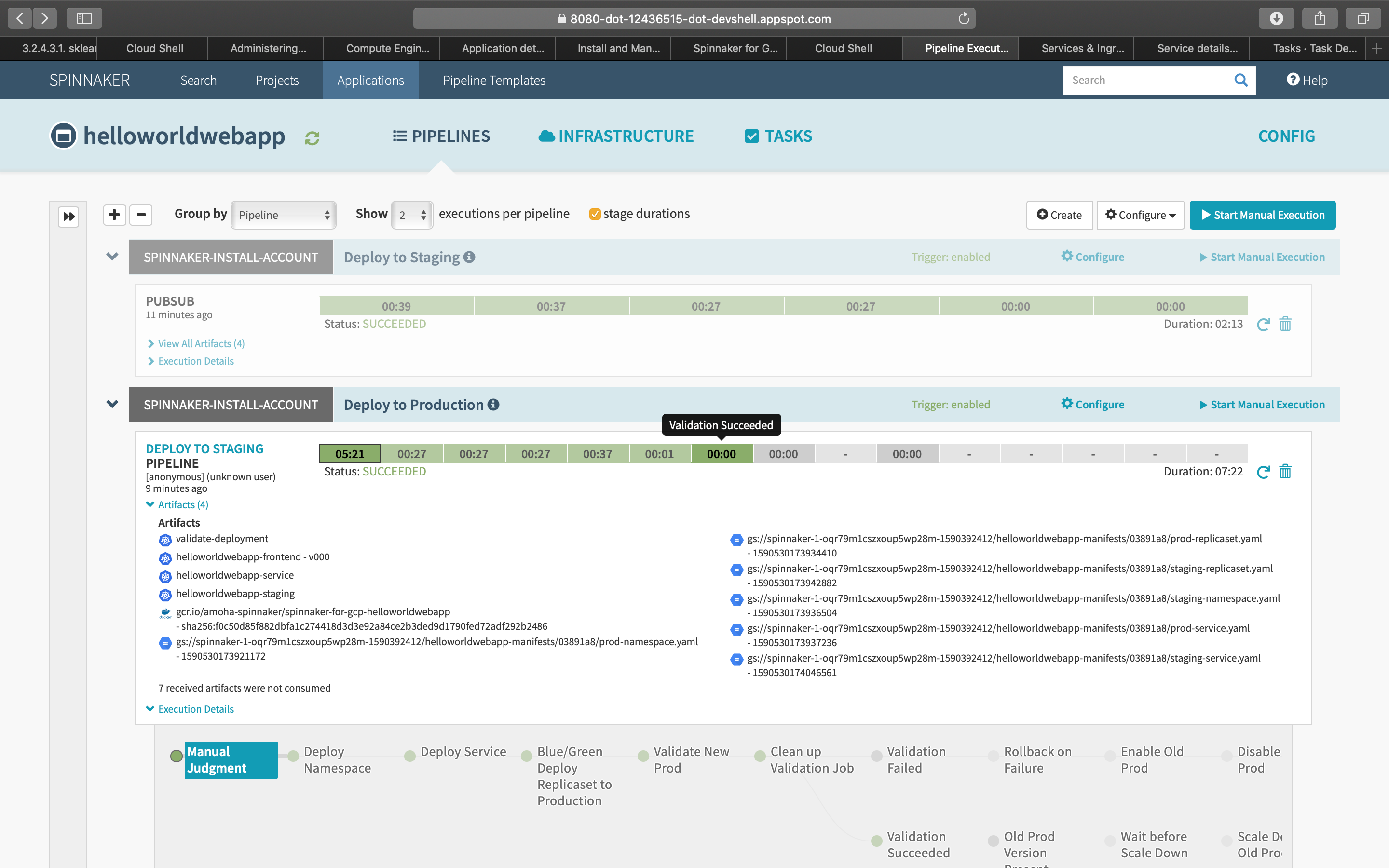

Deploying the sample application also deploys sample pipelines (of course, you could build your own or customize them using templates). In minutes, I was able to initiate a fully automated staging deployment with Manual Verification steps to validate the release in staging.

In a machine learning world where you want to operationalize models in production, this manual verification could be a perfect handoff and collaboration point between data science and operations teams. A data scientist’s workflow could be integrated into a Manual Judgment step, allowing data scientists to bring in their domain experts and ensure that model performance meets the goal. In industries like health care, a data science team can almost never declare a model ready for production use without input from domain experts, to ensure patient safety comes first. An architecture backed by Spinnaker that uses Kubernetes under the hood can be a blessing for data scientists, who no longer have to worry about infrastructure requests for small, medium, or big data projects. All of this is taken care of for them automatically as part of the application’s deployment configuration.
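The approval gate described above can be sketched as a pipeline that runs stages in order and halts when a gate does not pass. This is a minimal Python illustration, assuming a rule that every listed reviewer (for example, a data scientist and a domain expert) must approve. In Spinnaker itself, a Manual Judgment stage pauses the pipeline until a person chooses to continue or stop it; the `approvals` dictionary here merely stands in for that decision, and all stage and reviewer names are hypothetical.

```python
from typing import Callable

# A stage is a name plus an action that returns True to continue or False to halt.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first one that returns False.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, action in stages:
        if not action():
            print(f"Pipeline halted at stage: {name}")
            break
        completed.append(name)
    return completed


def manual_judgment(reviewers: list[str], approvals: dict[str, bool]) -> Callable[[], bool]:
    """Build a gate that passes only if every required reviewer has approved."""
    def gate() -> bool:
        # A reviewer with no recorded decision counts as not approved.
        return all(approvals.get(r, False) for r in reviewers)
    return gate


# Usage: the domain expert has not signed off yet, so production deployment never runs.
reviewers = ["data-scientist", "clinical-expert"]
approvals = {"data-scientist": True, "clinical-expert": False}
stages = [
    ("deploy-to-staging", lambda: True),
    ("manual-judgment", manual_judgment(reviewers, approvals)),
    ("deploy-to-production", lambda: True),
]
print(run_pipeline(stages))
```

The design choice worth noting is that the gate is just another stage: the data science review lives inside the deployment flow instead of beside it, which is exactly the gap the third challenge in the story described.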

Once the application is approved for a given environment, deployment strategies such as rolling upgrades and canary analysis are available out of the box with Spinnaker, which significantly eases the operational and release burden and accelerates innovation.
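To give a feel for what canary analysis decides, here is a toy Python scoring rule. It compares each metric from the canary against the baseline, counts the metrics within a relative tolerance, and promotes only if the percentage passing meets a threshold. The metric names, the 10% tolerance, and the 75% threshold are hypothetical. Spinnaker’s automated canary analysis (Kayenta) uses statistical comparisons rather than this fixed-tolerance rule.

```python
def canary_score(baseline: dict[str, float], canary: dict[str, float], tolerance: float = 0.1) -> float:
    """Return the percentage of metrics where the canary is within `tolerance` (relative) of baseline."""
    if not baseline:
        raise ValueError("baseline must contain at least one metric")
    passed = 0
    for metric, base_value in baseline.items():
        canary_value = canary[metric]
        # Relative difference; fall back to absolute difference when the baseline is zero.
        if base_value == 0:
            diff = abs(canary_value)
        else:
            diff = abs(canary_value - base_value) / abs(base_value)
        if diff <= tolerance:
            passed += 1
    return 100.0 * passed / len(baseline)


def canary_decision(score: float, pass_threshold: float = 75.0) -> str:
    """Promote the canary only if its score meets the pass threshold."""
    return "promote" if score >= pass_threshold else "rollback"


# Usage: hypothetical metrics for a new model version served to a small slice of traffic.
baseline = {"error_rate": 0.02, "latency_ms": 120.0, "prediction_drift": 0.05}
canary = {"error_rate": 0.021, "latency_ms": 150.0, "prediction_drift": 0.05}
score = canary_score(baseline, canary)
print(round(score, 1), canary_decision(score))
```

With these sample numbers, latency regresses by 25%, so only two of the three metrics pass (a score of about 66.7) and the canary is rolled back.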

My experimentation with Spinnaker is just getting started, and there is a lot more to learn and do. Several companies are investing in approaches to deliver Spinnaker to the enterprise (OpsMx, Armory, and CloudBees, to name a few).

In the context of applications that use predictive models, there are many other evolving approaches (e.g., Seldon and AWS SageMaker, to name a few) that can host and expose a model via an API while providing many metrics relevant to data scientists. This space is still evolving; for example, Kubeflow integrates Seldon so that models can be hosted easily with Kubeflow.

Embracing change requires IT to have a smooth, efficient, and well-governed application lifecycle with continuous delivery, not just for predictive analytics applications but for all enterprise applications and services. While many organizations are getting really good at continuous integration (CI), continuous delivery still remains an aspiration.

Embracing change is easier said than done, but post-COVID-19, the ability to embrace change fast is a must-have to stay relevant. Until we address the last mile of application delivery with continuous delivery, “fail-fast” may remain a myth, with a constant blame game between development/engineering and IT operations. DevOps as a culture is here to stay, but now we add data scientists and domain experts to the mix.

CIOs and CTOs will need to think about what their post-COVID-19 IT strategy and enterprise architecture will look like, with machine learning and cloud becoming de facto go-to technologies for supporting a remote workforce at scale.

Will CIOs make modernizing IT a top priority in 2021, and will they be asking their teams about their approach to continuous delivery of applications?