Letters from Spark+AI

Microsoft announced several capabilities for Responsible Artificial Intelligence at #SparkAISummit. The capabilities themselves were awesome, but the eye-catching part of the presentation for me (and likely for most attendees) was the amazing AI-enabled Virtual Stage. I was envious at first that Rohan was able to be outdoors without a mask, surrounded by green grass and clouds. Then he showed us all where he really was, and that was mind-blowing. All of it was made possible by VR and AI technologies. Pretty cool, Rohan.

Responsible AI was anchored on:

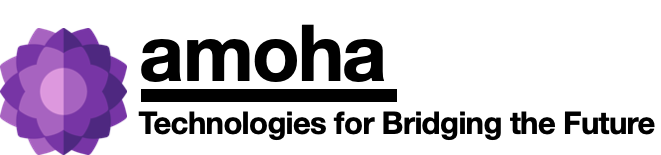

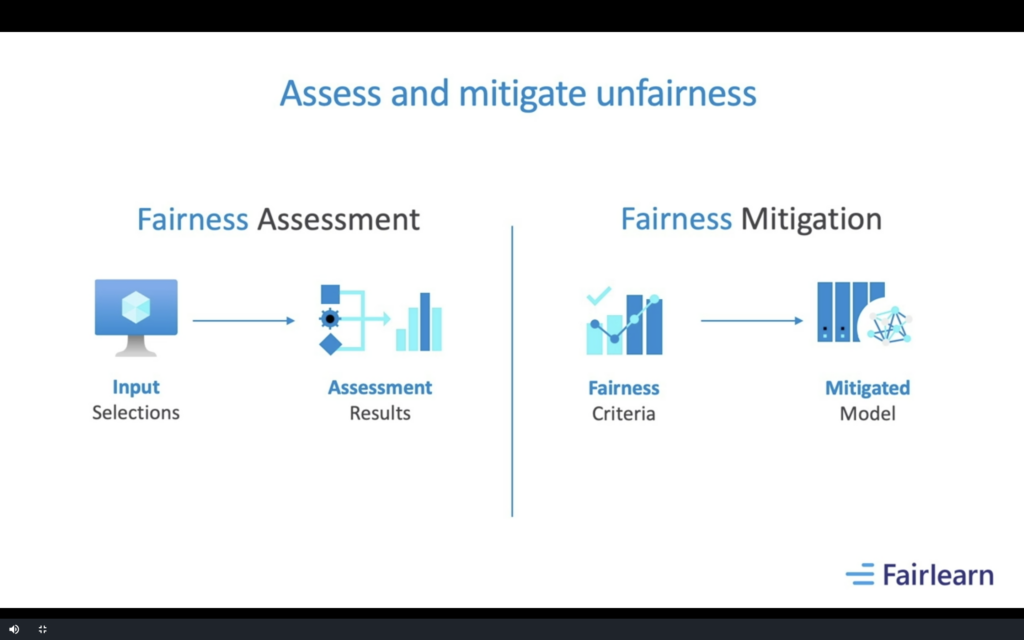

- Model Fairness

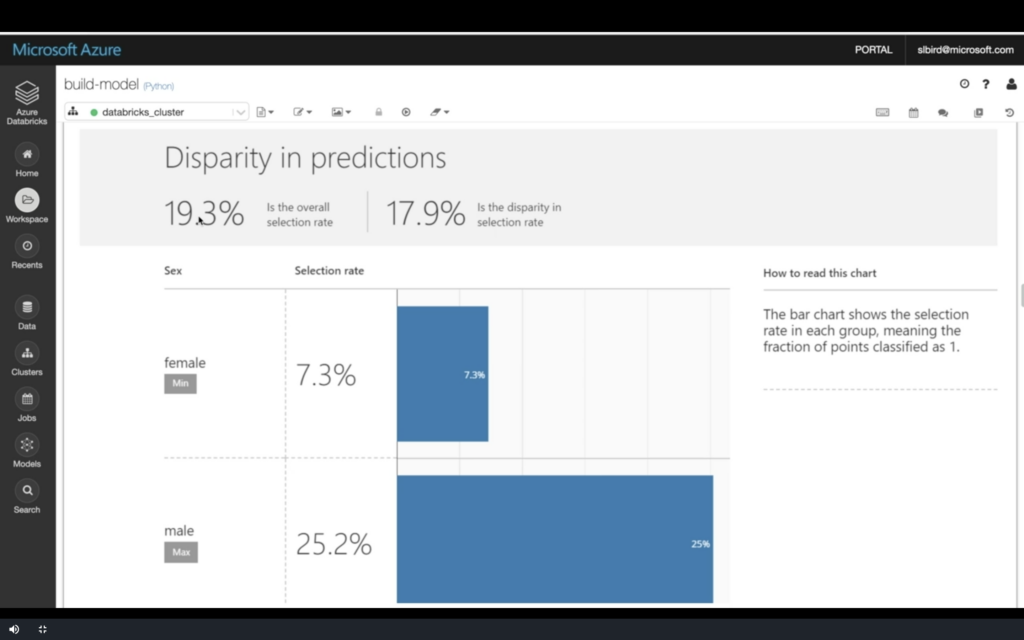

- Model Interpretability

- Auditability

- Privacy

Ensuring models aren’t biased when making life-altering decisions is important. Microsoft demonstrated model fairness in loan application approval by detecting models that were biased toward approving male applicants, and then showed how easily this unfair element in the model’s behavior could be mitigated.
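To make "biased toward approving male applicants" a bit more concrete, here is a minimal sketch of one common fairness check: comparing approval rates across groups (often called demographic parity difference). The talk summary does not say which metric the demo used, so the metric choice, the function names, and the toy data below are all assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1 = approved) per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        approved[group] += decision
    return {g: approved[g] / total[g] for g in total}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group approval rates (0 = equal)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for eight hypothetical loan applicants.
decisions = [1, 1, 1, 0, 1, 0, 0, 1]
groups = ["male", "male", "male", "male",
          "female", "female", "female", "female"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups are approved at similar rates. A large gap is a signal to investigate, not proof of unfairness on its own, since other metrics (for example, error rates per group) can tell a different story.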

Model Interpretability gives us insight into the predictions of the models. Why did the models predict the way they did? For Decision Trees and other glass box models, interpretability is more readily available than for black box models powered by DNNs. However, tools such as SHAP and LIME give us some level of interpretability with black box models.
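SHAP builds on Shapley values, which split a single prediction among the input features. The sketch below computes exact Shapley values for a tiny, hypothetical scoring function by averaging each feature's marginal contribution over every feature ordering. It is not the `shap` library's API, and `loan_score`, the feature names, and the baseline are assumptions made up for this example. The brute-force approach is only feasible for a handful of features.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Averages each feature's marginal contribution over all orderings in
    which features are switched from their baseline to their actual value.
    """
    features = list(instance)
    contributions = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)      # start from the baseline input
        previous = predict(current)
        for f in order:
            current[f] = instance[f]  # reveal one feature's actual value
            value = predict(current)
            contributions[f] += value - previous
            previous = value
    return {f: total / len(orderings) for f, total in contributions.items()}


def loan_score(x):
    """Hypothetical black box model with an interaction between features."""
    score = 0.5 * x["income"] + 0.25 * x["credit_history"]
    if x["income"] > 0 and x["credit_history"] > 0:
        score += 1.0
    return score


baseline = {"income": 0.0, "credit_history": 0.0}
applicant = {"income": 4.0, "credit_history": 8.0}

phi = shapley_values(loan_score, applicant, baseline)
print(phi)
```

The attributions always add up to the difference between the applicant's score and the baseline score, which is what makes this kind of explanation easy to read: each feature gets a share of the prediction.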

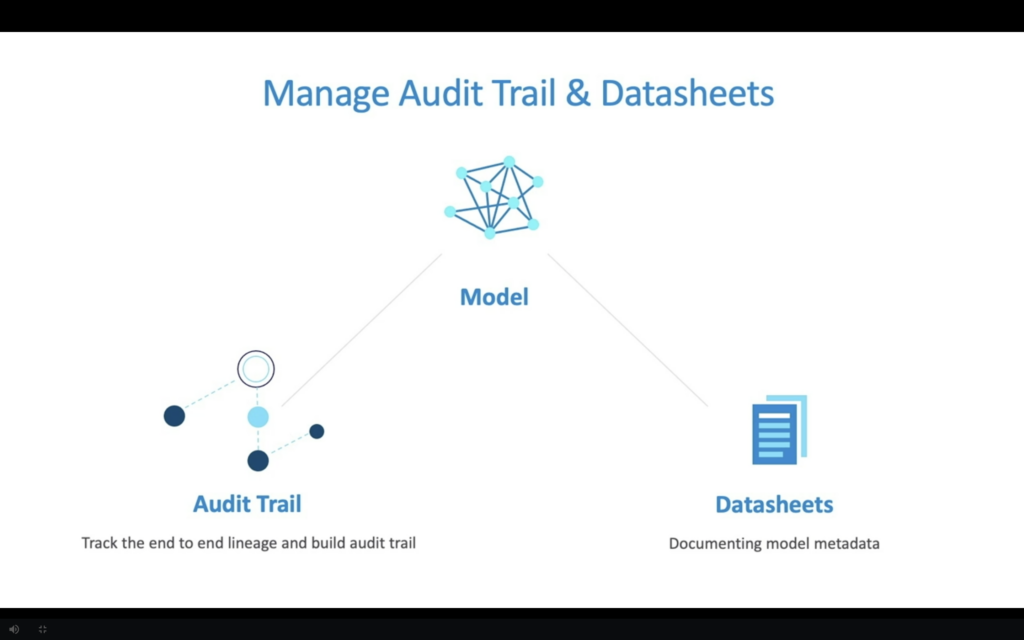

Audit trails for models across the lifecycle increase the ability of enterprises to deliver on the promise of governance. Additionally, data privacy during the development of models is important. Microsoft showed some pretty cool data privacy capabilities that restrict queries from users and limit PII exposure by adding statistical noise to the results.
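Adding statistical noise to query results is the core idea of differential privacy. As a rough sketch, and assuming the Laplace mechanism (the summary above does not say which mechanism the demo used), a private count could look like this. The function names, the `epsilon` value, and the income figures are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential samples with the same
    rate is Laplace-distributed with scale 1 / rate.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Count matching records and add Laplace noise.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
incomes = [32_000, 58_000, 41_000, 97_000, 76_000, 23_000]

noisy = private_count(incomes, lambda x: x > 50_000, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller `epsilon` means more noise and stronger privacy. A larger one gives more accurate answers but reveals more about any individual record. Real systems also cap how many queries a user may run, which matches the "restrict queries" part of the demo.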

The demo showed many of these capabilities using a loans data set.

As we embrace AI/ML in various domains, it’s important that we use these technologies not only to compete effectively in the marketplace and run efficient, data-driven organizations, but also to be socially aware and responsible. This responsibility sits with all of us in the AI/ML community. #ResponsibleAI